BLEU Score¶

Module Interface¶

- class torchmetrics.text.BLEUScore(n_gram=4, smooth=False, weights=None, **kwargs)[source]¶

Calculate BLEU score of machine translated text with one or more references.

As input to

forwardandupdatethe metric accepts the following input:preds(Sequence): An iterable of machine translated corpustarget(Sequence): An iterable of iterables of reference corpus

As output of

forwardandupdatethe metric returns the following output:bleu(Tensor): A tensor with the BLEU Score

- Parameters:

smooth¶ (

bool) – Whether or not to apply smoothing, see Machine Translation Evolutionkwargs¶ (

Any) – Additional keyword arguments, see Advanced metric settings for more info.weights¶ (

Optional[Sequence[float]]) – Weights used for unigrams, bigrams, etc. to calculate BLEU score. If not provided, uniform weights are used.

- Raises:

ValueError – If a length of a list of weights is not

Noneand not equal ton_gram.

Example

>>> from torchmetrics.text import BLEUScore >>> preds = ['the cat is on the mat'] >>> target = [['there is a cat on the mat', 'a cat is on the mat']] >>> bleu = BLEUScore() >>> bleu(preds, target) tensor(0.7598)

- plot(val=None, ax=None)[source]¶

Plot a single or multiple values from the metric.

- Parameters:

val¶ (

Union[Tensor,Sequence[Tensor],None]) – Either a single result from calling metric.forward or metric.compute or a list of these results. If no value is provided, will automatically call metric.compute and plot that result.ax¶ (

Optional[Axes]) – An matplotlib axis object. If provided will add plot to that axis

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

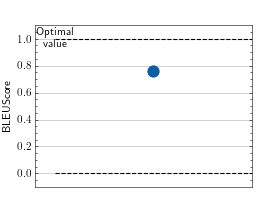

>>> # Example plotting a single value >>> from torchmetrics.text import BLEUScore >>> metric = BLEUScore() >>> preds = ['the cat is on the mat'] >>> target = [['there is a cat on the mat', 'a cat is on the mat']] >>> metric.update(preds, target) >>> fig_, ax_ = metric.plot()

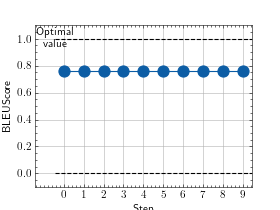

>>> # Example plotting multiple values >>> from torchmetrics.text import BLEUScore >>> metric = BLEUScore() >>> preds = ['the cat is on the mat'] >>> target = [['there is a cat on the mat', 'a cat is on the mat']] >>> values = [ ] >>> for _ in range(10): ... values.append(metric(preds, target)) >>> fig_, ax_ = metric.plot(values)

Functional Interface¶

- torchmetrics.functional.text.bleu_score(preds, target, n_gram=4, smooth=False, weights=None)[source]¶

Calculate BLEU score of machine translated text with one or more references.

- Parameters:

preds¶ (

Union[str,Sequence[str]]) – An iterable of machine translated corpustarget¶ (

Sequence[Union[str,Sequence[str]]]) – An iterable of iterables of reference corpusweights¶ (

Optional[Sequence[float]]) – Weights used for unigrams, bigrams, etc. to calculate BLEU score. If not provided, uniform weights are used.

- Return type:

- Returns:

Tensor with BLEU Score

- Raises:

ValueError – If

predsandtargetcorpus have different lengths.ValueError – If a length of a list of weights is not

Noneand not equal ton_gram.

Example

>>> from torchmetrics.functional.text import bleu_score >>> preds = ['the cat is on the mat'] >>> target = [['there is a cat on the mat', 'a cat is on the mat']] >>> bleu_score(preds, target) tensor(0.7598)

References

[1] BLEU: a Method for Automatic Evaluation of Machine Translation by Papineni, Kishore, Salim Roukos, Todd Ward, and Wei-Jing Zhu BLEU

[2] Automatic Evaluation of Machine Translation Quality Using Longest Common Subsequence and Skip-Bigram Statistics by Chin-Yew Lin and Franz Josef Och Machine Translation Evolution