Word Error Rate

Module Interface

- class torchmetrics.text.WordErrorRate(**kwargs)

Word error rate (WordErrorRate) is a common metric of the performance of an automatic speech recognition (ASR) system.

This value indicates the proportion of words that were incorrectly predicted, relative to the number of words in the reference. The lower the value, the better the performance of the ASR system, with a WER of 0 being a perfect score. Word error rate is computed as:

\[WER = \frac{S + D + I}{N} = \frac{S + D + I}{S + D + C}\]

where:

- \(S\) is the number of substitutions,
- \(D\) is the number of deletions,
- \(I\) is the number of insertions,
- \(C\) is the number of correct words,
- \(N\) is the number of words in the reference (\(N=S+D+C\)).
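The counts in the formula can be obtained from a word-level edit-distance alignment between the reference and the prediction. The following is a minimal pure-Python sketch of that idea, not the library's implementation: `count_edits` is a hypothetical helper, and it assumes words are obtained by splitting on whitespace.

```python
from typing import List, Tuple


def count_edits(reference: List[str], hypothesis: List[str]) -> Tuple[int, int, int, int]:
    """Return (S, D, I, C) for one optimal word-level alignment (hypothetical helper)."""
    n, m = len(reference), len(hypothesis)
    # cost[i][j] = minimum number of edits turning reference[:i] into hypothesis[:j]
    cost = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        cost[i][0] = i  # delete every reference word
    for j in range(1, m + 1):
        cost[0][j] = j  # insert every hypothesis word
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            diff = 0 if reference[i - 1] == hypothesis[j - 1] else 1
            cost[i][j] = min(
                cost[i - 1][j - 1] + diff,  # match or substitution
                cost[i - 1][j] + 1,         # deletion
                cost[i][j - 1] + 1,         # insertion
            )

    # Walk back through the table and label each step of one optimal alignment.
    subs = dels = ins = correct = 0
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0:
            diff = 0 if reference[i - 1] == hypothesis[j - 1] else 1
            if cost[i][j] == cost[i - 1][j - 1] + diff:
                if diff:
                    subs += 1
                else:
                    correct += 1
                i, j = i - 1, j - 1
                continue
        if i > 0 and cost[i][j] == cost[i - 1][j] + 1:
            dels += 1
            i -= 1
        else:
            ins += 1
            j -= 1
    return subs, dels, ins, correct


# (reference, prediction) pairs, assumed to be tokenized by whitespace
pairs = [
    ("this is the reference", "this is the prediction"),
    ("there is another one", "there is an other sample"),
]
S = D = I = C = 0
for ref, hyp in pairs:
    s, d, ins_count, c = count_edits(ref.split(), hyp.split())
    S, D, I, C = S + s, D + d, I + ins_count, C + c

N = S + D + C               # number of reference words
wer = (S + D + I) / N
print(S, D, I, C, wer)      # 3 0 1 5 0.5

# Insertions are not bounded by N, so the ratio can exceed 1.
s, d, ins_count, c = count_edits(["hello"], "hello there big world".split())
long_wer = (s + d + ins_count) / (s + d + c)  # 3 insertions / 1 reference word = 3.0
```

Under these assumptions the two sentence pairs give 4 errors over 8 reference words, and the last case shows why a WER above 1 is possible when the prediction is much longer than the reference.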

Compute WER score of transcribed segments against references.

As input to forward and update the metric accepts the following input:

- preds (List): Transcription(s) to score as a string or list of strings
- target (List): Reference(s) for each speech input as a string or list of strings

As output of forward and compute the metric returns the following output:

- wer (Tensor): A tensor with the Word Error Rate score
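To illustrate the update/compute pattern described above, here is a rough pure-Python sketch. `RunningWER` and `edit_distance` are hypothetical stand-ins, not part of torchmetrics, and the sketch assumes that errors and reference word counts are pooled across all updates before dividing, as the formula suggests for a set of segments.

```python
from typing import List, Sequence, Union


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Word-level Levenshtein distance, keeping only two rows of the table."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j - 1] + (r != h), prev[j] + 1, curr[j - 1] + 1))
        prev = curr
    return prev[-1]


class RunningWER:
    """Hypothetical stand-in that mimics an update/compute style metric."""

    def __init__(self) -> None:
        self.errors = 0  # running S + D + I
        self.total = 0   # running N (reference words)

    def update(self, preds: Union[str, List[str]], target: Union[str, List[str]]) -> None:
        # Accept a single string or a list of strings, as the text above describes.
        if isinstance(preds, str):
            preds = [preds]
        if isinstance(target, str):
            target = [target]
        for pred, ref in zip(preds, target):
            pred_words, ref_words = pred.split(), ref.split()
            self.errors += edit_distance(ref_words, pred_words)
            self.total += len(ref_words)

    def compute(self) -> float:
        return self.errors / self.total


metric = RunningWER()
metric.update("hello world", "hello world")  # 0 errors, 2 reference words
metric.update(
    ["the quick brown dog jumped over"],
    ["the quick brown fox jumps over"],
)                                            # 2 errors, 6 reference words
pooled_wer = metric.compute()                # 2 / 8 = 0.25
```

Note that pooling differs from averaging per-sentence scores: the two sentences here have individual rates of 0 and 1/3, whose mean is about 0.167, while the pooled value is 0.25.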

- Parameters:

kwargs (Any) – Additional keyword arguments, see Advanced metric settings for more info.

Examples

>>> from torchmetrics.text import WordErrorRate
>>> preds = ["this is the prediction", "there is an other sample"]
>>> target = ["this is the reference", "there is another one"]
>>> wer = WordErrorRate()
>>> wer(preds, target)
tensor(0.5000)

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

val (Union[Tensor,Sequence[Tensor],None]) – Either a single result from calling metric.forward or metric.compute, or a list of these results. If no value is provided, metric.compute is called automatically and its result is plotted.

ax (Optional[Axes]) – A matplotlib axis object. If provided, the plot is added to that axis.

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

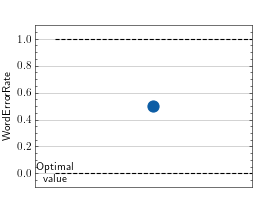

>>> # Example plotting a single value
>>> from torchmetrics.text import WordErrorRate
>>> metric = WordErrorRate()
>>> preds = ["this is the prediction", "there is an other sample"]
>>> target = ["this is the reference", "there is another one"]
>>> metric.update(preds, target)
>>> fig_, ax_ = metric.plot()

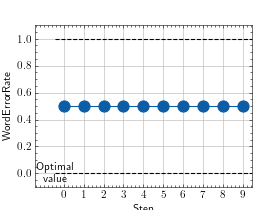

>>> # Example plotting multiple values
>>> from torchmetrics.text import WordErrorRate
>>> metric = WordErrorRate()
>>> preds = ["this is the prediction", "there is an other sample"]
>>> target = ["this is the reference", "there is another one"]
>>> values = []
>>> for _ in range(10):
...     values.append(metric(preds, target))
>>> fig_, ax_ = metric.plot(values)

Functional Interface

- torchmetrics.functional.text.word_error_rate(preds, target)

Word error rate (WordErrorRate) is a common metric of the performance of an automatic speech recognition (ASR) system.

This value indicates the proportion of words that were incorrectly predicted, relative to the number of words in the reference. The lower the value, the better the performance of the ASR system, with a WER of 0 being a perfect score.

- Parameters:

- Return type:

- Returns:

Word error rate score

Examples

>>> from torchmetrics.functional.text import word_error_rate
>>> preds = ["this is the prediction", "there is an other sample"]
>>> target = ["this is the reference", "there is another one"]
>>> word_error_rate(preds=preds, target=target)
tensor(0.5000)