Hinge Loss

Module Interface

- class torchmetrics.HingeLoss(squared=False, multiclass_mode=None, **kwargs)

Note

From v0.10, a `binary_*`, `multiclass_*` and `multilabel_*` version of each classification metric now exists. Moving forward, we recommend using these versions. This base metric will still work as it did prior to v0.10 until v0.11. From v0.11, the `task` argument introduced in this metric will be required and the general order of arguments may change, such that this metric will just function as a single entry point for calling the three specialized versions.

Computes the mean Hinge loss, typically used for Support Vector Machines (SVMs).

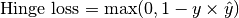

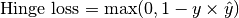

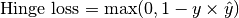

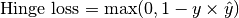

In the binary case it is defined as:

Where

is the target, and

is the target, and  is the prediction.

is the prediction.In the multi-class case, when

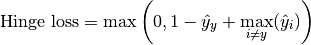

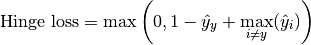

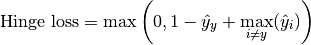

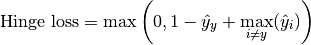

multiclass_mode=None(default),multiclass_mode=MulticlassMode.CRAMMER_SINGERormulticlass_mode="crammer-singer", this metric will compute the multi-class hinge loss defined by Crammer and Singer as:

Where

is the target class (where

is the target class (where  is the number of classes),

and

is the number of classes),

and  is the predicted output per class.

is the predicted output per class.In the multi-class case when

multiclass_mode=MulticlassMode.ONE_VS_ALLormulticlass_mode='one-vs-all', this metric will use a one-vs-all approach to compute the hinge loss, giving a vector of C outputs where each entry pits that class against all remaining classes.This metric can optionally output the mean of the squared hinge loss by setting

squared=TrueOnly accepts inputs with preds shape of (N) (binary) or (N, C) (multi-class) and target shape of (N).
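The binary definition can be checked by hand. The following is a minimal from-scratch sketch in plain Python, not the library's implementation; the helper name `binary_hinge` is hypothetical, and the mapping of `{0, 1}` labels to `{-1, +1}` is an assumption inferred from the binary example on this page, which passes `{0, 1}` targets.

```python
def binary_hinge(preds, target, squared=False):
    """Mean of max(0, 1 - y * y_hat) over all samples (hypothetical helper)."""
    losses = []
    for y_hat, label in zip(preds, target):
        y = 1.0 if label == 1 else -1.0      # assumed mapping {0, 1} -> {-1, +1}
        margin = max(0.0, 1.0 - y * y_hat)   # hinge term for one sample
        losses.append(margin ** 2 if squared else margin)
    return sum(losses) / len(losses)


loss = binary_hinge([-2.2, 2.4, 0.1], [0, 1, 1])
squared_loss = binary_hinge([-2.2, 2.4, 0.1], [0, 1, 1], squared=True)
print(round(loss, 4))          # 0.3
print(round(squared_loss, 4))  # 0.27
```

Only the third sample falls inside the margin (its term is 0.9), so the mean over three samples is 0.3, which agrees with the binary example on this page.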

- Parameters

`squared` (`bool`) – If True, this will compute the squared hinge loss. Otherwise, computes the regular hinge loss (default).

`multiclass_mode` (`Union[str, MulticlassMode, None]`) – Which approach to use for multi-class inputs (has no effect in the binary case). `None` (default), `MulticlassMode.CRAMMER_SINGER` or `"crammer-singer"` uses the Crammer Singer multi-class hinge loss. `MulticlassMode.ONE_VS_ALL` or `"one-vs-all"` computes the hinge loss in a one-vs-all fashion.

`kwargs` (`Any`) – Additional keyword arguments, see Advanced metric settings for more info.

- Raises

ValueError – If `multiclass_mode` is not: `None`, `MulticlassMode.CRAMMER_SINGER`, `"crammer-singer"`, `MulticlassMode.ONE_VS_ALL` or `"one-vs-all"`.

- Example (binary case):

    >>> import torch
    >>> from torchmetrics import HingeLoss
    >>> target = torch.tensor([0, 1, 1])
    >>> preds = torch.tensor([-2.2, 2.4, 0.1])
    >>> hinge = HingeLoss()
    >>> hinge(preds, target)
    tensor(0.3000)

- Example (default / multiclass case):

    >>> target = torch.tensor([0, 1, 2])
    >>> preds = torch.tensor([[-1.0, 0.9, 0.2], [0.5, -1.1, 0.8], [2.2, -0.5, 0.3]])
    >>> hinge = HingeLoss()
    >>> hinge(preds, target)
    tensor(2.9000)
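The value 2.9 in the example above can be reproduced by hand from the Crammer and Singer definition. This is a plain-Python sketch under that definition, not the library's code; `crammer_singer_hinge` is a hypothetical helper name.

```python
def crammer_singer_hinge(preds, target, squared=False):
    """Mean of max(0, 1 - score[y] + max_{i != y} score[i]) (hypothetical helper)."""
    losses = []
    for scores, y in zip(preds, target):
        best_other = max(s for i, s in enumerate(scores) if i != y)
        margin = max(0.0, 1.0 - scores[y] + best_other)
        losses.append(margin ** 2 if squared else margin)
    return sum(losses) / len(losses)


preds = [[-1.0, 0.9, 0.2], [0.5, -1.1, 0.8], [2.2, -0.5, 0.3]]
target = [0, 1, 2]
cs_loss = crammer_singer_hinge(preds, target)
print(round(cs_loss, 4))  # 2.9
```

Each of the three samples contributes 2.9 on its own (for instance 1 - (-1.0) + 0.9 for the first), so the mean is 2.9 as well.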

- Example (multiclass example, one vs all mode):

    >>> target = torch.tensor([0, 1, 2])
    >>> preds = torch.tensor([[-1.0, 0.9, 0.2], [0.5, -1.1, 0.8], [2.2, -0.5, 0.3]])
    >>> hinge = HingeLoss(multiclass_mode="one-vs-all")
    >>> hinge(preds, target)
    tensor([2.2333, 1.5000, 1.2333])
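The one-vs-all vector can be reproduced the same way. The sketch below assumes that, for class `c`, a sample counts as positive (+1) when its target equals `c` and negative (-1) otherwise, and that the binary hinge is then averaged over samples per class. `one_vs_all_hinge` is a hypothetical helper, not part of the library.

```python
def one_vs_all_hinge(preds, target, squared=False):
    """Per-class mean binary hinge, treating class c vs. the rest (hypothetical helper)."""
    num_classes = len(preds[0])
    per_class = []
    for c in range(num_classes):
        total = 0.0
        for scores, label in zip(preds, target):
            y = 1.0 if label == c else -1.0          # class c against all others
            margin = max(0.0, 1.0 - y * scores[c])   # binary hinge on the class-c score
            total += margin ** 2 if squared else margin
        per_class.append(total / len(preds))
    return per_class


preds = [[-1.0, 0.9, 0.2], [0.5, -1.1, 0.8], [2.2, -0.5, 0.3]]
target = [0, 1, 2]
ova = one_vs_all_hinge(preds, target)
print([round(v, 4) for v in ova])  # [2.2333, 1.5, 1.2333]
```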

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- compute()

Override this method to compute the final metric value from state variables synchronized across the distributed backend.
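To illustrate what "state variables" means for a mean-type metric, here is a small sketch that assumes the state is a running sum of per-sample losses plus a sample count. The class `RunningBinaryHinge` and its attribute names are hypothetical and only mimic the update/compute pattern; they are not the library's internals.

```python
class RunningBinaryHinge:
    """Accumulates binary hinge terms across batches (hypothetical sketch)."""

    def __init__(self, squared=False):
        self.squared = squared
        self.loss_sum = 0.0   # assumed state: sum of per-sample losses
        self.count = 0        # assumed state: number of samples seen

    def update(self, preds, target):
        for y_hat, label in zip(preds, target):
            y = 1.0 if label == 1 else -1.0
            margin = max(0.0, 1.0 - y * y_hat)
            self.loss_sum += margin ** 2 if self.squared else margin
            self.count += 1

    def compute(self):
        return self.loss_sum / self.count


metric = RunningBinaryHinge()
metric.update([-2.2, 2.4], [0, 1])   # first batch
metric.update([0.1], [1])            # second batch
running = metric.compute()
print(round(running, 4))  # 0.3
```

Keeping a sum and a count yields the mean over all samples (0.3 here) rather than the mean of per-batch means (which would be 0.45 for these two batches).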

- Return type

BinaryHingeLoss

- class torchmetrics.classification.BinaryHingeLoss(squared=False, ignore_index=None, validate_args=True, **kwargs)

Computes the mean Hinge loss typically used for Support Vector Machines (SVMs) for binary tasks. It is defined as:

$$\text{Hinge loss} = \max(0, 1 - y \times \hat{y})$$

where $y \in \{-1, 1\}$ is the target and $\hat{y} \in \mathbb{R}$ is the prediction.

Accepts the following input tensors:

- `preds` (float tensor): `(N, ...)`. Preds should be a tensor containing probabilities or logits for each observation. If preds has values outside [0,1] range we consider the input to be logits and will auto apply sigmoid per element.
- `target` (int tensor): `(N, ...)`. Target should be a tensor containing ground truth labels, and therefore only contain {0,1} values (except if `ignore_index` is specified).

Additional dimension `...` will be flattened into the batch dimension.

- Parameters

`squared` (`bool`) – If True, this will compute the squared hinge loss. Otherwise, computes the regular hinge loss.

`ignore_index` (`Optional[int]`) – Specifies a target value that is ignored and does not contribute to the metric calculation.

`validate_args` (`bool`) – bool indicating if input arguments and tensors should be validated for correctness. Set to `False` for faster computations.

`kwargs` (`Any`) – Additional keyword arguments, see Advanced metric settings for more info.

Example

    >>> import torch
    >>> from torchmetrics.classification import BinaryHingeLoss
    >>> preds = torch.tensor([0.25, 0.25, 0.55, 0.75, 0.75])
    >>> target = torch.tensor([0, 0, 1, 1, 1])
    >>> metric = BinaryHingeLoss()
    >>> metric(preds, target)
    tensor(0.6900)
    >>> metric = BinaryHingeLoss(squared=True)
    >>> metric(preds, target)
    tensor(0.6905)
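The input rule described above (apply sigmoid only when some value lies outside [0, 1]) and the two numbers in this example can be traced with a plain-Python sketch. The helper names are hypothetical, and the `{0, 1}` to `{-1, +1}` label mapping is an assumption that happens to reproduce the documented outputs.

```python
import math


def format_binary_preds(preds):
    """Apply sigmoid per element only if any value is outside [0, 1]."""
    if any(p < 0.0 or p > 1.0 for p in preds):
        return [1.0 / (1.0 + math.exp(-p)) for p in preds]
    return list(preds)  # already probabilities, left untouched


def binary_hinge(preds, target, squared=False):
    losses = []
    for y_hat, label in zip(format_binary_preds(preds), target):
        y = 1.0 if label == 1 else -1.0   # assumed mapping {0, 1} -> {-1, +1}
        margin = max(0.0, 1.0 - y * y_hat)
        losses.append(margin ** 2 if squared else margin)
    return sum(losses) / len(losses)


preds = [0.25, 0.25, 0.55, 0.75, 0.75]
target = [0, 0, 1, 1, 1]
loss = binary_hinge(preds, target)
squared_loss = binary_hinge(preds, target, squared=True)
print(round(loss, 4))          # 0.69
print(round(squared_loss, 4))  # 0.6905

# Values outside [0, 1] are treated as logits and squashed into (0, 1).
squashed = format_binary_preds([-2.2, 2.4, 0.1])
```

Because the example inputs already lie in [0, 1], no sigmoid is applied to them; the per-sample terms are 1.25, 1.25, 0.45, 0.25 and 0.25, whose mean is 0.69.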

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- compute()

Override this method to compute the final metric value from state variables synchronized across the distributed backend.

- Return type

MulticlassHingeLoss

- class torchmetrics.classification.MulticlassHingeLoss(num_classes, squared=False, multiclass_mode='crammer-singer', ignore_index=None, validate_args=True, **kwargs)

Computes the mean Hinge loss typically used for Support Vector Machines (SVMs) for multiclass tasks.

The metric can be computed in two ways. Either the definition by Crammer and Singer is used:

$$\text{Hinge loss} = \max\left(0, 1 - \hat{y}_y + \max_{i \ne y} (\hat{y}_i)\right)$$

where $y \in \{0, \ldots, C - 1\}$ is the target class (where $C$ is the number of classes), and $\hat{y} \in \mathbb{R}^C$ is the predicted output per class. Alternatively, the metric can also be computed in a one-vs-all approach, where each class is valued against all other classes in a binary fashion.

Accepts the following input tensors:

- `preds` (float tensor): `(N, C, ...)`. Preds should be a tensor containing probabilities or logits for each observation. If preds has values outside [0,1] range we consider the input to be logits and will auto apply softmax per sample.
- `target` (int tensor): `(N, ...)`. Target should be a tensor containing ground truth labels, and therefore only contain values in the [0, n_classes-1] range (except if `ignore_index` is specified).

Additional dimension `...` will be flattened into the batch dimension.

- Parameters

`num_classes` (`int`) – Integer specifying the number of classes.

`squared` (`bool`) – If True, this will compute the squared hinge loss. Otherwise, computes the regular hinge loss.

`multiclass_mode` (`Literal['crammer-singer', 'one-vs-all']`) – Determines how to compute the metric.

`ignore_index` (`Optional[int]`) – Specifies a target value that is ignored and does not contribute to the metric calculation.

`validate_args` (`bool`) – bool indicating if input arguments and tensors should be validated for correctness. Set to `False` for faster computations.

`kwargs` (`Any`) – Additional keyword arguments, see Advanced metric settings for more info.

Example

    >>> import torch
    >>> from torchmetrics.classification import MulticlassHingeLoss
    >>> preds = torch.tensor([[0.25, 0.20, 0.55],
    ...                       [0.55, 0.05, 0.40],
    ...                       [0.10, 0.30, 0.60],
    ...                       [0.90, 0.05, 0.05]])
    >>> target = torch.tensor([0, 1, 2, 0])
    >>> metric = MulticlassHingeLoss(num_classes=3)
    >>> metric(preds, target)
    tensor(0.9125)
    >>> metric = MulticlassHingeLoss(num_classes=3, squared=True)
    >>> metric(preds, target)
    tensor(1.1131)
    >>> metric = MulticlassHingeLoss(num_classes=3, multiclass_mode='one-vs-all')
    >>> metric(preds, target)
    tensor([0.8750, 1.1250, 1.1000])
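The first two outputs above can be traced by hand. The sketch below is plain Python with hypothetical helper names: `format_multiclass_preds` mirrors the stated input rule (softmax per sample only when some value lies outside [0, 1]) and `crammer_singer_hinge` follows the Crammer and Singer definition. It is an illustration under those assumptions, not the library's implementation.

```python
import math


def format_multiclass_preds(preds):
    """Apply a per-sample softmax only if some value lies outside [0, 1]."""
    looks_like_logits = any(p < 0.0 or p > 1.0 for row in preds for p in row)
    if not looks_like_logits:
        return [list(row) for row in preds]
    formatted = []
    for row in preds:
        shift = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(p - shift) for p in row]
        norm = sum(exps)
        formatted.append([e / norm for e in exps])
    return formatted


def crammer_singer_hinge(preds, target, squared=False):
    losses = []
    for scores, y in zip(preds, target):
        best_other = max(s for i, s in enumerate(scores) if i != y)
        margin = max(0.0, 1.0 - scores[y] + best_other)
        losses.append(margin ** 2 if squared else margin)
    return sum(losses) / len(losses)


probs = [[0.25, 0.20, 0.55], [0.55, 0.05, 0.40], [0.10, 0.30, 0.60], [0.90, 0.05, 0.05]]
target = [0, 1, 2, 0]
loss = crammer_singer_hinge(format_multiclass_preds(probs), target)
squared_loss = crammer_singer_hinge(format_multiclass_preds(probs), target, squared=True)
print(round(loss, 4))          # 0.9125
print(round(squared_loss, 6))  # 1.113125

# A row containing values outside [0, 1] is treated as logits and normalized.
row = format_multiclass_preds([[-1.0, 0.9, 0.2]])[0]
```

The per-sample terms are 1.30, 1.50, 0.70 and 0.15, giving a mean of 0.9125; their squares average to 1.113125, which the example displays as 1.1131.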

Initializes internal Module state, shared by both nn.Module and ScriptModule.

- compute()

Override this method to compute the final metric value from state variables synchronized across the distributed backend.

- Return type

Functional Interface

- torchmetrics.functional.hinge_loss(preds, target, squared=False, multiclass_mode=None, task=None, num_classes=None, ignore_index=None, validate_args=True)

Note

From v0.10, a `binary_*`, `multiclass_*` and `multilabel_*` version of each classification metric now exists. Moving forward, we recommend using these versions. This base metric will still work as it did prior to v0.10 until v0.11. From v0.11, the `task` argument introduced in this metric will be required and the general order of arguments may change, such that this metric will just function as a single entry point for calling the three specialized versions.

Computes the mean Hinge loss typically used for Support Vector Machines (SVMs).

In the binary case it is defined as:

$$\text{Hinge loss} = \max(0, 1 - y \times \hat{y})$$

where $y \in \{-1, 1\}$ is the target and $\hat{y} \in \mathbb{R}$ is the prediction.

In the multi-class case, when `multiclass_mode=None` (default), `multiclass_mode=MulticlassMode.CRAMMER_SINGER` or `multiclass_mode="crammer-singer"`, this metric will compute the multi-class hinge loss defined by Crammer and Singer as:

$$\text{Hinge loss} = \max\left(0, 1 - \hat{y}_y + \max_{i \ne y} (\hat{y}_i)\right)$$

where $y \in \{0, \ldots, C - 1\}$ is the target class (where $C$ is the number of classes), and $\hat{y} \in \mathbb{R}^C$ is the predicted output per class.

In the multi-class case, when `multiclass_mode=MulticlassMode.ONE_VS_ALL` or `multiclass_mode='one-vs-all'`, this metric will use a one-vs-all approach to compute the hinge loss, giving a vector of C outputs where each entry pits that class against all remaining classes.

This metric can optionally output the mean of the squared hinge loss by setting `squared=True`.

Only accepts inputs with preds shape of (N) (binary) or (N, C) (multi-class) and target shape of (N).

- Parameters

`preds` (`Tensor`) – Predictions from model (as float outputs from decision function).

`squared` (`bool`) – If True, this will compute the squared hinge loss. Otherwise, computes the regular hinge loss (default).

`multiclass_mode` (`Optional[Literal['crammer-singer', 'one-vs-all']]`) – Which approach to use for multi-class inputs (has no effect in the binary case). `None` (default), `MulticlassMode.CRAMMER_SINGER` or `"crammer-singer"` uses the Crammer Singer multi-class hinge loss. `MulticlassMode.ONE_VS_ALL` or `"one-vs-all"` computes the hinge loss in a one-vs-all fashion.

- Raises

ValueError – If preds shape is not of size (N) or (N, C).

ValueError – If target shape is not of size (N).

ValueError – If `multiclass_mode` is not: `None`, `MulticlassMode.CRAMMER_SINGER`, `"crammer-singer"`, `MulticlassMode.ONE_VS_ALL` or `"one-vs-all"`.

- Example (binary case):

    >>> import torch
    >>> from torchmetrics.functional import hinge_loss
    >>> target = torch.tensor([0, 1, 1])
    >>> preds = torch.tensor([-2.2, 2.4, 0.1])
    >>> hinge_loss(preds, target)
    tensor(0.3000)

- Example (default / multiclass case):

    >>> target = torch.tensor([0, 1, 2])
    >>> preds = torch.tensor([[-1.0, 0.9, 0.2], [0.5, -1.1, 0.8], [2.2, -0.5, 0.3]])
    >>> hinge_loss(preds, target)
    tensor(2.9000)

- Example (multiclass example, one vs all mode):

    >>> target = torch.tensor([0, 1, 2])
    >>> preds = torch.tensor([[-1.0, 0.9, 0.2], [0.5, -1.1, 0.8], [2.2, -0.5, 0.3]])
    >>> hinge_loss(preds, target, multiclass_mode="one-vs-all")
    tensor([2.2333, 1.5000, 1.2333])

- Return type

binary_hinge_loss

- torchmetrics.functional.classification.binary_hinge_loss(preds, target, squared=False, ignore_index=None, validate_args=False)

Computes the mean Hinge loss typically used for Support Vector Machines (SVMs) for binary tasks. It is defined as:

$$\text{Hinge loss} = \max(0, 1 - y \times \hat{y})$$

where $y \in \{-1, 1\}$ is the target and $\hat{y} \in \mathbb{R}$ is the prediction.

Accepts the following input tensors:

- `preds` (float tensor): `(N, ...)`. Preds should be a tensor containing probabilities or logits for each observation. If preds has values outside [0,1] range we consider the input to be logits and will auto apply sigmoid per element.
- `target` (int tensor): `(N, ...)`. Target should be a tensor containing ground truth labels, and therefore only contain {0,1} values (except if `ignore_index` is specified).

Additional dimension `...` will be flattened into the batch dimension.

- Parameters

`squared` (`bool`) – If True, this will compute the squared hinge loss. Otherwise, computes the regular hinge loss.

`ignore_index` (`Optional[int]`) – Specifies a target value that is ignored and does not contribute to the metric calculation.

`validate_args` (`bool`) – bool indicating if input arguments and tensors should be validated for correctness. Set to `False` for faster computations.

Example

    >>> import torch
    >>> from torchmetrics.functional.classification import binary_hinge_loss
    >>> preds = torch.tensor([0.25, 0.25, 0.55, 0.75, 0.75])
    >>> target = torch.tensor([0, 0, 1, 1, 1])
    >>> binary_hinge_loss(preds, target)
    tensor(0.6900)
    >>> binary_hinge_loss(preds, target, squared=True)
    tensor(0.6905)
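The effect of `ignore_index` can be pictured as dropping the matching samples before averaging. The following plain-Python sketch assumes exactly that behaviour; `binary_hinge_ignoring` is a hypothetical helper, and the value `-1` used as the ignored label is an arbitrary choice for illustration.

```python
def binary_hinge_ignoring(preds, target, ignore_index=None, squared=False):
    """Mean binary hinge over samples whose target differs from ignore_index."""
    kept = [(p, t) for p, t in zip(preds, target) if t != ignore_index]
    losses = []
    for y_hat, label in kept:
        y = 1.0 if label == 1 else -1.0   # assumed mapping {0, 1} -> {-1, +1}
        margin = max(0.0, 1.0 - y * y_hat)
        losses.append(margin ** 2 if squared else margin)
    return sum(losses) / len(losses)


preds = [0.25, 0.25, 0.55, 0.75, 0.75]
full = binary_hinge_ignoring(preds, [0, 0, 1, 1, 1])
masked = binary_hinge_ignoring(preds, [0, 0, 1, 1, -1], ignore_index=-1)
print(round(full, 4))    # 0.69
print(round(masked, 4))  # 0.8
```

With the last sample ignored, the remaining terms 1.25, 1.25, 0.45 and 0.25 are averaged over four samples instead of five.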

- Return type

multiclass_hinge_loss

- torchmetrics.functional.classification.multiclass_hinge_loss(preds, target, num_classes, squared=False, multiclass_mode='crammer-singer', ignore_index=None, validate_args=False)

Computes the mean Hinge loss typically used for Support Vector Machines (SVMs) for multiclass tasks.

The metric can be computed in two ways. Either the definition by Crammer and Singer is used:

$$\text{Hinge loss} = \max\left(0, 1 - \hat{y}_y + \max_{i \ne y} (\hat{y}_i)\right)$$

where $y \in \{0, \ldots, C - 1\}$ is the target class (where $C$ is the number of classes), and $\hat{y} \in \mathbb{R}^C$ is the predicted output per class. Alternatively, the metric can also be computed in a one-vs-all approach, where each class is valued against all other classes in a binary fashion.

Accepts the following input tensors:

- `preds` (float tensor): `(N, C, ...)`. Preds should be a tensor containing probabilities or logits for each observation. If preds has values outside [0,1] range we consider the input to be logits and will auto apply softmax per sample.
- `target` (int tensor): `(N, ...)`. Target should be a tensor containing ground truth labels, and therefore only contain values in the [0, n_classes-1] range (except if `ignore_index` is specified).

Additional dimension `...` will be flattened into the batch dimension.

- Parameters

`num_classes` (`int`) – Integer specifying the number of classes.

`squared` (`bool`) – If True, this will compute the squared hinge loss. Otherwise, computes the regular hinge loss.

`multiclass_mode` (`Literal['crammer-singer', 'one-vs-all']`) – Determines how to compute the metric.

`ignore_index` (`Optional[int]`) – Specifies a target value that is ignored and does not contribute to the metric calculation.

`validate_args` (`bool`) – bool indicating if input arguments and tensors should be validated for correctness. Set to `False` for faster computations.

Example

    >>> import torch
    >>> from torchmetrics.functional.classification import multiclass_hinge_loss
    >>> preds = torch.tensor([[0.25, 0.20, 0.55],
    ...                       [0.55, 0.05, 0.40],
    ...                       [0.10, 0.30, 0.60],
    ...                       [0.90, 0.05, 0.05]])
    >>> target = torch.tensor([0, 1, 2, 0])
    >>> multiclass_hinge_loss(preds, target, num_classes=3)
    tensor(0.9125)
    >>> multiclass_hinge_loss(preds, target, num_classes=3, squared=True)
    tensor(1.1131)
    >>> multiclass_hinge_loss(preds, target, num_classes=3, multiclass_mode='one-vs-all')
    tensor([0.8750, 1.1250, 1.1000])
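The statement that additional dimensions are flattened into the batch dimension can be illustrated with nested lists. This sketch assumes preds of shape `(N, C, D)` and target of shape `(N, D)`, and treats every position along `D` as one more sample; `flatten_extra_dims` is a hypothetical helper, and the exact ordering of the flattened samples is an assumption (it does not affect a mean).

```python
def flatten_extra_dims(preds, target):
    """Turn preds (N, C, D) and target (N, D) into (N*D, C) and (N*D,)."""
    flat_preds, flat_target = [], []
    for sample_scores, sample_labels in zip(preds, target):
        for d in range(len(sample_labels)):
            # Collect the C class scores belonging to position d of this sample.
            flat_preds.append([class_scores[d] for class_scores in sample_scores])
            flat_target.append(sample_labels[d])
    return flat_preds, flat_target


# N=2 samples, C=3 classes, D=2 extra positions per sample.
preds = [
    [[0.25, 0.55], [0.20, 0.05], [0.55, 0.40]],
    [[0.10, 0.90], [0.30, 0.05], [0.60, 0.05]],
]
target = [[0, 1], [2, 0]]
flat_preds, flat_target = flatten_extra_dims(preds, target)
print(flat_target)    # [0, 1, 2, 0]
print(flat_preds[0])  # [0.25, 0.2, 0.55]
```

After flattening, the four rows and four labels are the same as in the example above, so a loss computed on them would be evaluated over four samples.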

- Return type