Calibration Error

Module Interface

- class torchmetrics.CalibrationError(n_bins=15, norm='l1', compute_on_step=None, **kwargs)

Computes the Top-label Calibration Error. Three different norms are implemented, each corresponding to a variation of the calibration error metric.

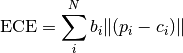

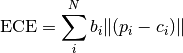

L1 norm (Expected Calibration Error)

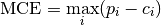

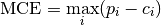

Infinity norm (Maximum Calibration Error)

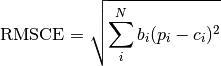

L2 norm (Root Mean Square Calibration Error)

Where

is the top-1 prediction accuracy in bin

is the top-1 prediction accuracy in bin  ,

,

is the average confidence of predictions in bin

is the average confidence of predictions in bin  , and

, and

is the fraction of data points in bin

is the fraction of data points in bin  .

.Note

L2-norm debiasing is not yet supported.
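The three norms can be checked by hand with a small plain-Python sketch. It assumes the per-bin statistics $p_i$, $c_i$ and $b_i$ have already been computed; `calibration_error_from_bins` is a hypothetical helper written for illustration (not part of torchmetrics), and it labels the three variants "l1", "max" and "l2".

```python
import math
from typing import Sequence


def calibration_error_from_bins(
    acc: Sequence[float],   # p_i: top-1 accuracy in bin i
    conf: Sequence[float],  # c_i: average confidence in bin i
    frac: Sequence[float],  # b_i: fraction of samples in bin i
    norm: str = "l1",
) -> float:
    """Apply one of the three norms to precomputed per-bin statistics.

    Empty bins are assumed to be passed with p_i = c_i = 0 (zero gap)
    and b_i = 0, so they do not change any of the three results.
    """
    if not acc or not (len(acc) == len(conf) == len(frac)):
        raise ValueError("acc, conf and frac must be non-empty and equally long")
    gaps = [p - c for p, c in zip(acc, conf)]  # p_i - c_i per bin
    if norm == "l1":   # ECE: sum_i b_i * |p_i - c_i|
        return sum(b * abs(g) for b, g in zip(frac, gaps))
    if norm == "max":  # MCE: max_i |p_i - c_i|
        return max(abs(g) for g in gaps)
    if norm == "l2":   # RMSCE: sqrt(sum_i b_i * (p_i - c_i)^2)
        return math.sqrt(sum(b * g * g for b, g in zip(frac, gaps)))
    raise ValueError(f"unsupported norm: {norm!r}")
```

For example, with two bins where acc = [0.5, 1.0], conf = [0.7, 0.9] and frac = [0.25, 0.75], the gaps are 0.2 and 0.1 in magnitude: "l1" gives about 0.125, "max" gives about 0.2, and "l2" gives about 0.132. The maximum ignores the bin weights, which is why it reports the larger, less populated gap.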

- Parameters

n_bins (int) – Number of bins to use when computing probabilities and accuracies.

norm (str) – Norm used to compare empirical and expected probability bins: "l1", "l2", or "max". Defaults to "l1", or Expected Calibration Error.

debias – Applies debiasing term, only implemented for l2 norm. Defaults to True.

compute_on_step (Optional[bool]) – Forward only calls update() and returns None if this is set to False.

Deprecated since version v0.8: This argument no longer has any effect and will be removed in v0.9.

kwargs (Dict[str, Any]) – Additional keyword arguments; see Advanced metric settings for more info.
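The n_bins parameter controls how confidences are grouped before the per-bin terms are computed. The sketch below assumes n_bins equal-width bins over [0, 1] and places a confidence of exactly 1.0 in the last bin; the library's exact boundary handling may differ. `bin_statistics` is a hypothetical helper that takes each sample's top-1 confidence and whether its top-1 prediction was correct, and returns the per-bin lists of accuracy, mean confidence, and fraction of samples.

```python
from typing import List, Sequence, Tuple


def bin_statistics(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 15,
) -> Tuple[List[float], List[float], List[float]]:
    """Return per-bin (accuracy p_i, mean confidence c_i, fraction b_i).

    Hypothetical helper: uses n_bins equal-width bins over [0, 1];
    a confidence of exactly 1.0 is placed in the last bin.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if not confidences:
        raise ValueError("need at least one sample")
    counts = [0] * n_bins
    conf_sum = [0.0] * n_bins
    correct_sum = [0] * n_bins
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # equal-width bin index
        counts[idx] += 1
        conf_sum[idx] += conf
        correct_sum[idx] += int(ok)
    total = len(confidences)
    # Empty bins get zero accuracy and zero confidence, so their gap is 0.
    acc = [s / n if n else 0.0 for s, n in zip(correct_sum, counts)]
    avg_conf = [s / n if n else 0.0 for s, n in zip(conf_sum, counts)]
    frac = [n / total for n in counts]
    return acc, avg_conf, frac
```

With n_bins=2, the confidences [0.1, 0.3, 0.35, 0.9] fall into the lower bin three times and the upper bin once, so the fractions come out as [0.75, 0.25]. Fewer bins give more stable per-bin averages; more bins resolve miscalibration at a finer scale.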


- compute()

Computes calibration error across all confidences and accuracies.

- Returns

Calibration error across previously collected examples.

- Return type

Tensor
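Because compute() reports the error across all previously collected examples, state has to persist between update() calls. One way to do that is to keep per-bin running sums. The hypothetical `RunningCalibrationError` class below illustrates this update/compute pattern in plain Python, assuming equal-width bins; it is not the torchmetrics implementation, which works on tensors and may store its state differently.

```python
import math
from typing import Sequence


class RunningCalibrationError:
    """Hypothetical pure-Python illustration of the update()/compute() pattern.

    Keeps per-bin sufficient statistics so that compute() covers every
    sample passed to update() since the last reset().
    """

    def __init__(self, n_bins: int = 15, norm: str = "l1") -> None:
        if norm not in ("l1", "l2", "max"):
            raise ValueError(f"unsupported norm: {norm!r}")
        self.n_bins = n_bins
        self.norm = norm
        self.reset()

    def reset(self) -> None:
        self.counts = [0] * self.n_bins
        self.conf_sum = [0.0] * self.n_bins
        self.correct_sum = [0] * self.n_bins

    def update(self, confidences: Sequence[float], correct: Sequence[bool]) -> None:
        if len(confidences) != len(correct):
            raise ValueError("confidences and correct must have the same length")
        for conf, ok in zip(confidences, correct):
            idx = min(int(conf * self.n_bins), self.n_bins - 1)  # equal-width bin
            self.counts[idx] += 1
            self.conf_sum[idx] += conf
            self.correct_sum[idx] += int(ok)

    def compute(self) -> float:
        total = sum(self.counts)
        if total == 0:
            raise ValueError("compute() called before any update()")
        gaps, weights = [], []
        for n, cs, ks in zip(self.counts, self.conf_sum, self.correct_sum):
            if n:  # skip empty bins; their weight b_i would be 0
                gaps.append(ks / n - cs / n)  # p_i - c_i
                weights.append(n / total)     # b_i
        if self.norm == "l1":
            return sum(w * abs(g) for w, g in zip(weights, gaps))
        if self.norm == "max":
            return max(abs(g) for g in gaps)
        return math.sqrt(sum(w * g * g for w, g in zip(weights, gaps)))
```

Feeding the samples in two batches, for example update([0.1, 0.3], [False, True]) followed by update([0.35, 0.9], [False, True]) with n_bins=2, yields the same result (about 0.0875 for "l1") as a single update() with all four samples.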

Functional Interface

- torchmetrics.functional.calibration_error(preds, target, n_bins=15, norm='l1')

Computes the Top-label Calibration Error.

Three different norms are implemented, each corresponding to a variation of the calibration error metric.

L1 norm (Expected Calibration Error)

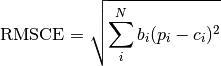

Infinity norm (Maximum Calibration Error)

L2 norm (Root Mean Square Calibration Error)

Where

is the top-1 prediction accuracy in bin

is the top-1 prediction accuracy in bin  ,

,

is the average confidence of predictions in bin

is the average confidence of predictions in bin  , and

, and

is the fraction of data points in bin

is the fraction of data points in bin  .
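For multiclass inputs, the functional form starts from per-class probabilities rather than per-bin statistics. In the top-label setting, each sample's confidence is the probability of its highest-scoring class, and the sample counts as correct when that class matches the target. The plain-Python `calibration_error_sketch` below is a hypothetical name (the real function operates on tensors). It follows that reduction, assumes equal-width bins over [0, 1], and then applies the chosen norm.

```python
import math
from typing import Sequence


def calibration_error_sketch(
    preds: Sequence[Sequence[float]],  # one row of class probabilities per sample
    target: Sequence[int],             # ground-truth class index per sample
    n_bins: int = 15,
    norm: str = "l1",
) -> float:
    """Plain-Python sketch of top-label calibration error (not the library code)."""
    if norm not in ("l1", "l2", "max"):
        raise ValueError(f"unsupported norm: {norm!r}")
    if len(preds) != len(target) or not preds:
        raise ValueError("preds and target must be non-empty and equally long")
    counts = [0] * n_bins
    conf_sum = [0.0] * n_bins
    correct_sum = [0] * n_bins
    for row, label in zip(preds, target):
        # Top-label reduction: the predicted class is the argmax of the row,
        # and its probability is the sample's confidence.
        predicted = max(range(len(row)), key=lambda k: row[k])
        confidence = row[predicted]
        idx = min(int(confidence * n_bins), n_bins - 1)  # equal-width bin
        counts[idx] += 1
        conf_sum[idx] += confidence
        correct_sum[idx] += int(predicted == label)
    total = len(preds)
    terms = [
        (n / total, correct_sum[i] / n - conf_sum[i] / n)  # (b_i, p_i - c_i)
        for i, n in enumerate(counts)
        if n
    ]
    if norm == "l1":
        return sum(b * abs(g) for b, g in terms)
    if norm == "max":
        return max(abs(g) for _, g in terms)
    return math.sqrt(sum(b * g * g for b, g in terms))
```

With preds [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1], [0.4, 0.3, 0.3], [0.3, 0.3, 0.4]], target [0, 2, 0, 0] and n_bins=2, the low-confidence bin is slightly underconfident (gap 0.1) while the high-confidence bin is overconfident (gap 0.35), giving an "l1" value of about 0.225.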

- Parameters

- Return type