Cohen Kappa

Module Interface

- class torchmetrics.CohenKappa(num_classes, weights=None, threshold=0.5, compute_on_step=None, **kwargs)

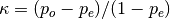

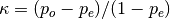

Calculates Cohen’s kappa score that measures inter-annotator agreement. It is defined as

$$\kappa = \frac{p_o - p_e}{1 - p_e}$$

where $p_o$ is the empirical probability of agreement and $p_e$ is the expected agreement when both annotators assign labels randomly. Note that $p_e$ is estimated using a per-annotator empirical prior over the class labels.

Works with binary, multiclass, and multilabel data. Accepts probabilities from a model output or integer class values in prediction. Works with multi-dimensional preds and target.
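As a sanity check on the definition $\kappa = (p_o - p_e) / (1 - p_e)$, the score can be computed by hand for two short label sequences. The sketch below is plain Python and does not use torchmetrics; the helper name `cohen_kappa_from_labels` is hypothetical and chosen only for illustration.

```python
from collections import Counter


def cohen_kappa_from_labels(preds, target):
    """Unweighted Cohen's kappa for two equal-length label sequences (sketch)."""
    n = len(target)
    # p_o: fraction of positions where the two label sequences agree.
    p_o = sum(p == t for p, t in zip(preds, target)) / n
    # Per-annotator empirical priors over the class labels.
    pred_counts = Counter(preds)
    target_counts = Counter(target)
    # p_e: chance agreement if each annotator labels at random from its own prior.
    classes = set(pred_counts) | set(target_counts)
    p_e = sum((pred_counts[c] / n) * (target_counts[c] / n) for c in classes)
    return (p_o - p_e) / (1 - p_e)


target = [1, 1, 0, 0]
preds = [0, 1, 0, 0]
kappa = cohen_kappa_from_labels(preds, target)
print(kappa)  # 0.5: p_o = 0.75, p_e = 0.5
```

Here three of four labels agree, so $p_o = 0.75$, and the priors give $p_e = 0.75 \cdot 0.5 + 0.25 \cdot 0.5 = 0.5$. The sketch does not guard against $p_e = 1$, where the score is undefined.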

- Forward accepts

  - preds (float or long tensor): (N, ...) or (N, C, ...) where C is the number of classes
  - target (long tensor): (N, ...)

If preds and target are the same shape and preds is a float tensor, we use the self.threshold argument to convert into integer labels. This is the case for binary and multi-label probabilities or logits.

If preds has an extra dimension as in the case of multi-class scores we perform an argmax on dim=1.

- Parameters

num_classes (int) – Number of classes in the dataset.

weights (Optional[str]) – Weighting type to calculate the score. Choose from:

  - None or 'none': no weighting
  - 'linear': linear weighting
  - 'quadratic': quadratic weighting

threshold (float) – Threshold for transforming probability or logit predictions to binary (0,1) predictions, in the case of binary or multi-label inputs. Default value of 0.5 corresponds to input being probabilities.

compute_on_step (Optional[bool]) – Forward only calls update() and returns None if this is set to False.

  Deprecated since version v0.8: Argument has no use anymore and will be removed in v0.9.

kwargs (Dict[str, Any]) – Additional keyword arguments, see Advanced metric settings for more info.
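To illustrate what the weights options change, here is a minimal plain-Python sketch of weighted kappa using the common formulation $\kappa_w = 1 - \sum_{ij} w_{ij} O_{ij} / \sum_{ij} w_{ij} E_{ij}$, where $O$ is the observed confusion matrix and $E$ the matrix expected under independence. The penalties $w_{ij} = |i - j|$ (linear) and $w_{ij} = (i - j)^2$ (quadratic) are the usual textbook choices; that torchmetrics computes the score in exactly this way is an assumption, and the function name `weighted_cohen_kappa` is hypothetical.

```python
def weighted_cohen_kappa(preds, target, num_classes, weights=None):
    """Cohen's kappa with optional disagreement weighting (illustrative sketch)."""
    n = len(target)
    classes = range(num_classes)

    # Observed counts: rows index the target label, columns the predicted label.
    observed = [[0.0] * num_classes for _ in classes]
    for p, t in zip(preds, target):
        observed[t][p] += 1.0

    # Expected counts if both label sequences were independent draws
    # from their own empirical class distributions.
    row_sums = [sum(observed[i]) for i in classes]
    col_sums = [sum(observed[i][j] for i in classes) for j in classes]
    expected = [[row_sums[i] * col_sums[j] / n for j in classes] for i in classes]

    def penalty(i, j):
        # Cost assigned to predicting class j when the target is class i.
        if weights is None or weights == "none":
            return 0.0 if i == j else 1.0
        if weights == "linear":
            return float(abs(i - j))
        if weights == "quadratic":
            return float((i - j) ** 2)
        raise ValueError(f"unknown weights option: {weights!r}")

    disagreement = sum(penalty(i, j) * observed[i][j] for i in classes for j in classes)
    chance = sum(penalty(i, j) * expected[i][j] for i in classes for j in classes)
    return 1.0 - disagreement / chance


target = [0, 0, 1, 2]
preds = [0, 2, 1, 2]
kappa_none = weighted_cohen_kappa(preds, target, num_classes=3)
kappa_linear = weighted_cohen_kappa(preds, target, num_classes=3, weights="linear")
kappa_quadratic = weighted_cohen_kappa(preds, target, num_classes=3, weights="quadratic")
print(kappa_none, kappa_linear, kappa_quadratic)  # about 0.636, 0.5, 0.385
```

The single error in this example confuses class 0 with class 2, the most distant pair, so the linear and quadratic variants penalize it more heavily than the unweighted score does. With only two classes all three options coincide, because every off-diagonal penalty equals 1.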

Example

    >>> import torch
    >>> from torchmetrics import CohenKappa
    >>> target = torch.tensor([1, 1, 0, 0])
    >>> preds = torch.tensor([0, 1, 0, 0])
    >>> cohenkappa = CohenKappa(num_classes=2)
    >>> cohenkappa(preds, target)
    tensor(0.5000)


Functional Interface

- torchmetrics.functional.cohen_kappa(preds, target, num_classes, weights=None, threshold=0.5)

Calculates Cohen’s kappa score that measures inter-annotator agreement.

It is defined as

$$\kappa = \frac{p_o - p_e}{1 - p_e}$$

where $p_o$ is the empirical probability of agreement and $p_e$ is the expected agreement when both annotators assign labels randomly. Note that $p_e$ is estimated using a per-annotator empirical prior over the class labels.

- Parameters

preds (Tensor) – (float or long tensor), either a (N, ...) tensor with labels or a (N, C, ...) tensor with labels/probabilities, where C is the number of classes

target (Tensor) – (long tensor), tensor with shape (N, ...) with ground truth labels

num_classes (int) – Number of classes in the dataset.

weights (Optional[str]) – Weighting type to calculate the score. Choose from:

  - None or 'none': no weighting
  - 'linear': linear weighting
  - 'quadratic': quadratic weighting

threshold (float) – Threshold value for binary or multi-label probabilities.
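The way preds is turned into integer labels (thresholding when it has the same shape as target, argmax over the class dimension when it has an extra one) can be sketched in plain Python for the simplest shapes, (N,) and (N, C). The helper `to_labels` is hypothetical, and treating a value equal to the threshold as class 1 is an assumption rather than documented behavior.

```python
def to_labels(preds, threshold=0.5):
    """Convert predictions to integer class labels (illustrative sketch).

    Handles only a flat list of N values or a list of N per-class score lists.
    """
    labels = []
    for p in preds:
        if isinstance(p, (list, tuple)):
            # Extra class dimension, shape (N, C): take the argmax over classes.
            labels.append(max(range(len(p)), key=lambda c: p[c]))
        elif isinstance(p, float):
            # Same shape as target, float values: threshold into {0, 1}.
            # Assumption: a score exactly at the threshold maps to 1.
            labels.append(int(p >= threshold))
        else:
            # Already an integer class label: pass through unchanged.
            labels.append(int(p))
    return labels


binary_labels = to_labels([0.2, 0.7, 0.4, 0.1])
multiclass_labels = to_labels([[0.1, 0.8, 0.1], [0.6, 0.3, 0.1]])
integer_labels = to_labels([0, 1, 0, 0])
print(binary_labels, multiclass_labels, integer_labels)
# [0, 1, 0, 0] [1, 0] [0, 1, 0, 0]
```

If the model emits logits rather than probabilities for binary or multi-label inputs, a threshold of 0.5 no longer corresponds to the decision boundary, so the threshold argument would need to be adjusted accordingly.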

Example

    >>> import torch
    >>> from torchmetrics.functional import cohen_kappa
    >>> target = torch.tensor([1, 1, 0, 0])
    >>> preds = torch.tensor([0, 1, 0, 0])
    >>> cohen_kappa(preds, target, num_classes=2)
    tensor(0.5000)

- Return type