Edit Distance

Module Interface

- class torchmetrics.text.EditDistance(substitution_cost=1, reduction='mean', **kwargs)

Calculates the Levenshtein edit distance between two sequences.

The edit distance is the number of characters that need to be substituted, inserted, or deleted, to transform the predicted text into the reference text. The lower the distance, the more accurate the model is considered to be.

Implementation is similar to nltk.edit_distance.
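To make the definition concrete, the following is a minimal pure-Python sketch of the Levenshtein recurrence with a configurable substitution cost. The name `levenshtein` is hypothetical and this is not the torchmetrics implementation. It only assumes, as described above, that an insertion or deletion costs 1 and a substitution costs `substitution_cost`.

```python
def levenshtein(pred: str, ref: str, substitution_cost: int = 1) -> int:
    """Illustrative edit distance between two strings (hypothetical helper)."""
    # prev[j] is the cost of turning the already-processed prefix of pred into ref[:j].
    prev = list(range(len(ref) + 1))
    for i, p_char in enumerate(pred, start=1):
        curr = [i]  # turning pred[:i] into the empty string takes i deletions
        for j, r_char in enumerate(ref, start=1):
            substitute = prev[j - 1] + (0 if p_char == r_char else substitution_cost)
            delete = prev[j] + 1       # drop p_char from the prediction
            insert = curr[j - 1] + 1   # add r_char to the prediction
            curr.append(min(substitute, delete, insert))
        prev = curr
    return prev[-1]


print(levenshtein("rain", "shine"))                       # 3
print(levenshtein("rain", "shine", substitution_cost=2))  # 5
```

Only two rows of the dynamic-programming table are kept at a time, so memory grows with the length of the reference rather than with the product of both lengths.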

As input to `forward` and `update`, the metric accepts the following input:

- `preds` (Sequence): An iterable of predicted texts (strings)
- `target` (Sequence): An iterable of reference texts (strings), one per prediction

As output of `forward` and `compute`, the metric returns the following output:

- `eed` (Tensor): A tensor with the edit distance score. If `reduction` is set to `'none'` or `None`, this has shape `(N, )`, where `N` is the batch size. Otherwise, this is a scalar.

- Parameters:

  - `substitution_cost` (int) – The cost of substituting one character for another.
  - `reduction` (Optional[Literal['mean', 'sum', 'none']]) – A method to reduce the metric score over samples.
    - `'mean'`: takes the mean over samples
    - `'sum'`: takes the sum over samples
    - `None` or `'none'`: returns the score per sample
  - `kwargs` (Any) – Additional keyword arguments, see Advanced metric settings for more info.
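The three `reduction` options can be illustrated with a small sketch in plain Python. The helper name `reduce_scores` is hypothetical and is not part of torchmetrics, which returns tensors rather than Python numbers. The per-sample distances 3 and 4 are taken from the multiple-strings example on this page.

```python
from typing import List, Optional, Union


def reduce_scores(
    scores: List[int], reduction: Optional[str] = "mean"
) -> Union[float, int, List[int]]:
    """Hypothetical helper mirroring the documented reduction options."""
    if reduction == "mean":
        return sum(scores) / len(scores)
    if reduction == "sum":
        return sum(scores)
    if reduction is None or reduction == "none":
        return list(scores)  # one score per sample, no aggregation
    raise ValueError(f"Unsupported reduction: {reduction!r}")


per_sample = [3, 4]  # ("rain", "shine") and ("lnaguaeg", "language")
print(reduce_scores(per_sample, "mean"))  # 3.5
print(reduce_scores(per_sample, "sum"))   # 7
print(reduce_scores(per_sample, None))    # [3, 4]
```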

- Example::

Basic example with two strings. Going from “rain” -> “sain” -> “shin” -> “shine” takes 3 edits:

    >>> from torchmetrics.text import EditDistance
    >>> metric = EditDistance()
    >>> metric(["rain"], ["shine"])
    tensor(3.)

- Example::

Basic example with two strings and a substitution cost of 2. Going from “rain” -> “sain” -> “shin” -> “shine” takes 3 edits, two of which are substitutions, so the total cost is 2 + 2 + 1 = 5:

    >>> from torchmetrics.text import EditDistance
    >>> metric = EditDistance(substitution_cost=2)
    >>> metric(["rain"], ["shine"])
    tensor(5.)

- Example::

Multiple strings example:

    >>> from torchmetrics.text import EditDistance
    >>> metric = EditDistance(reduction=None)
    >>> metric(["rain", "lnaguaeg"], ["shine", "language"])
    tensor([3, 4], dtype=torch.int32)
    >>> metric = EditDistance(reduction="mean")
    >>> metric(["rain", "lnaguaeg"], ["shine", "language"])
    tensor(3.5000)

- plot(val=None, ax=None)

Plot a single or multiple values from the metric.

- Parameters:

  - `val` (Union[Tensor, Sequence[Tensor], None]) – Either a single result from calling `metric.forward` or `metric.compute`, or a list of these results. If no value is provided, `metric.compute` is called automatically and its result is plotted.
  - `ax` (Optional[Axes]) – A matplotlib axis object. If provided, the plot is added to that axis.

- Return type:

- Returns:

Figure and Axes object

- Raises:

ModuleNotFoundError – If matplotlib is not installed

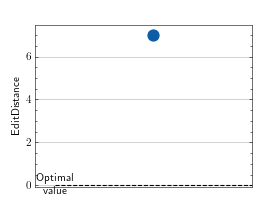

    >>> # Example plotting a single value
    >>> from torchmetrics.text import EditDistance
    >>> metric = EditDistance()
    >>> preds = ["this is the prediction", "there is an other sample"]
    >>> target = ["this is the reference", "there is another one"]
    >>> metric.update(preds, target)
    >>> fig_, ax_ = metric.plot()

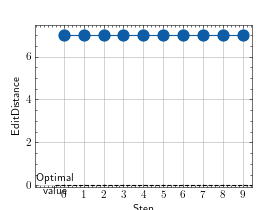

    >>> # Example plotting multiple values
    >>> from torchmetrics.text import EditDistance
    >>> metric = EditDistance()
    >>> preds = ["this is the prediction", "there is an other sample"]
    >>> target = ["this is the reference", "there is another one"]
    >>> values = []
    >>> for _ in range(10):
    ...     values.append(metric(preds, target))
    >>> fig_, ax_ = metric.plot(values)

Functional Interface

- torchmetrics.functional.text.edit_distance(preds, target, substitution_cost=1, reduction='mean')

Calculates the Levenshtein edit distance between two sequences.

The edit distance is the number of characters that need to be substituted, inserted, or deleted, to transform the predicted text into the reference text. The lower the distance, the more accurate the model is considered to be.

Implementation is similar to nltk.edit_distance.

- Parameters:

  - `preds` (Union[str, Sequence[str]]) – An iterable of predicted texts (strings).
  - `target` (Union[str, Sequence[str]]) – An iterable of reference texts (strings).
  - `substitution_cost` (int) – The cost of substituting one character for another.
  - `reduction` (Optional[Literal['mean', 'sum', 'none']]) – A method to reduce the metric score over samples.
    - `'mean'`: takes the mean over samples
    - `'sum'`: takes the sum over samples
    - `None` or `'none'`: returns the score per sample

- Raises:

  - ValueError – If `preds` and `target` do not have the same length.
  - ValueError – If `preds` or `target` contain non-string values.
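The two documented error conditions can be sketched as a small pre-check in plain Python. The helper `check_inputs` and its error messages are hypothetical, and wrapping a bare string into a one-element list is an assumption suggested by the `Union[str, Sequence[str]]` parameter types, not a statement about how torchmetrics handles it internally.

```python
from typing import List, Sequence, Tuple, Union


def check_inputs(
    preds: Union[str, Sequence[str]],
    target: Union[str, Sequence[str]],
) -> Tuple[List[str], List[str]]:
    """Hypothetical pre-check mirroring the documented ValueError conditions."""
    # Assumption: a bare string is treated as a batch of size one.
    preds = [preds] if isinstance(preds, str) else list(preds)
    target = [target] if isinstance(target, str) else list(target)
    if not all(isinstance(x, str) for x in preds + target):
        raise ValueError("preds and target must contain only strings")
    if len(preds) != len(target):
        raise ValueError(
            f"preds and target must have the same length, got {len(preds)} and {len(target)}"
        )
    return preds, target


try:
    check_inputs(["rain", "snow"], ["shine"])
except ValueError as err:
    print(f"rejected: {err}")
```

Running a check like this before scoring gives an early, readable error instead of a silently truncated comparison.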

- Return type: Tensor

- Example::

Basic example with two strings. Going from “rain” -> “sain” -> “shin” -> “shine” takes 3 edits:

    >>> from torchmetrics.functional.text import edit_distance
    >>> edit_distance(["rain"], ["shine"])
    tensor(3.)

- Example::

Basic example with two strings and a substitution cost of 2. Going from “rain” -> “sain” -> “shin” -> “shine” takes 3 edits, two of which are substitutions, so the total cost is 2 + 2 + 1 = 5:

    >>> from torchmetrics.functional.text import edit_distance
    >>> edit_distance(["rain"], ["shine"], substitution_cost=2)
    tensor(5.)

- Example::

Multiple strings example:

    >>> from torchmetrics.functional.text import edit_distance
    >>> edit_distance(["rain", "lnaguaeg"], ["shine", "language"], reduction=None)
    tensor([3, 4], dtype=torch.int32)
    >>> edit_distance(["rain", "lnaguaeg"], ["shine", "language"], reduction="mean")
    tensor(3.5000)